I continue to be interested in this new AI technology called “Large Language Models” (LLMs).

Large language models are AI tools that can perform a wide variety of tasks. They can generate human-like text, and they can also search and classify text based on its meaning.

They are trained by analyzing massive amounts of text from sources such as Wikipedia and Reddit.

I think they are interesting for three reasons:

Natural Language Interface

First, you can interact with the text generation capabilities using natural language instead of code.

For example, you can ask it, “Translate this sentence into French:” or “Summarize this news article:”.

This is a new way of coding, where you can use plain English to perform valuable tasks instead of writing code.

There’s exciting research in this area, such as Google’s “PromptChainer,” which lets you build AI-enabled apps by chaining together these English commands using a visual interface.

AI-enabled “no code” interfaces like this could allow non-programmers to perform useful tasks and create powerful applications.
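To make the chaining idea concrete, here is a minimal sketch in Python. It is not how PromptChainer works internally; it only shows the general pattern of feeding one English command's output into the next. The `run_chain` and `echo_model` names are made up for illustration, and `echo_model` is a stand-in that does not call a real LLM.

```python
from typing import Callable, List


def run_chain(templates: List[str], user_input: str,
              complete: Callable[[str], str]) -> str:
    """Fill each template with the previous step's output, then call the model."""
    text = user_input
    for template in templates:
        prompt = template.format(input=text)
        text = complete(prompt)
    return text


def echo_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call: it just wraps the prompt
    # so you can see what each step received.
    return f"[model output for: {prompt}]"


# Two English commands chained together: summarize first, then translate.
chain = [
    "Summarize this news article: {input}",
    "Translate this sentence into French: {input}",
]

result = run_chain(chain, "Local team wins the championship.", echo_model)
print(result)
```

In a real app you would swap `echo_model` for a function that sends the prompt to whichever LLM service you use and returns its reply.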

Generality and Emergence

Second, LLMs are extremely general and can perform a wide variety of tasks, even things they weren’t originally trained to do. Earlier AI models could only perform tasks they were explicitly trained to do, like summarization or translation. GPT-3 can do things like multiply numbers, even though it wasn’t trained to do multiplication.

These LLMs improve and gain new abilities as they are trained on more text. So as you feed them more data, they become more accurate and can do new types of tasks.

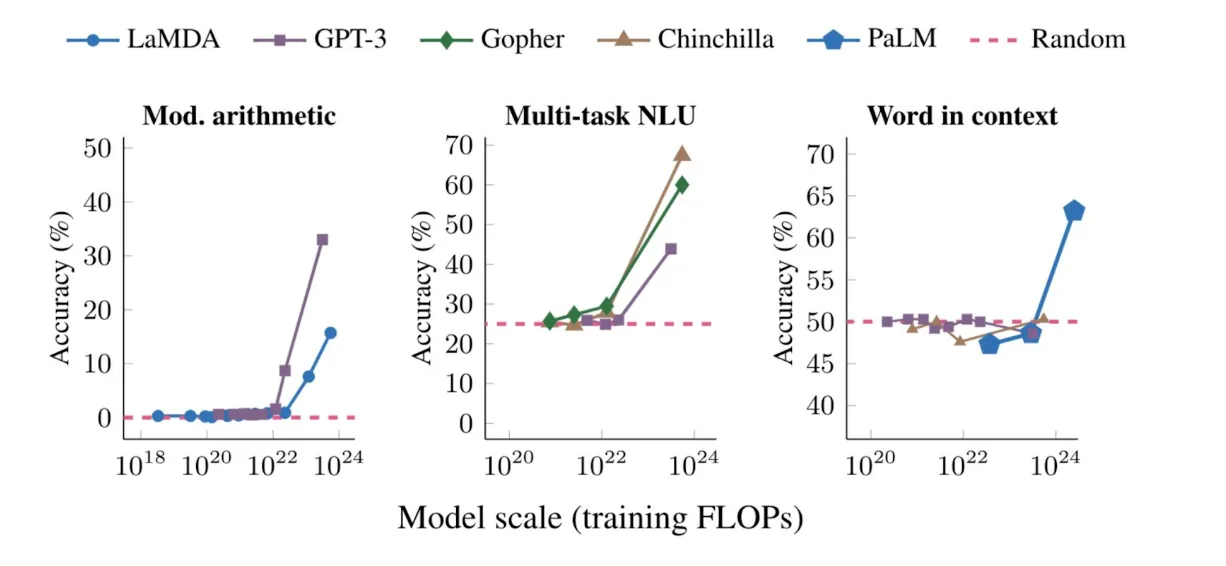

This behavior is called “emergent abilities”: as a model becomes larger, it can do things smaller models can’t do.

For example, abilities like performing arithmetic and answering college entrance exam questions only “emerge” after you feed the AI a certain amount of data.

This opens up the exciting possibility that by feeding AI more data, it can gain new abilities unforeseen by its creators. Recent advances in computing performance allow us to train AI models that are much, much larger than before.

We see similar behavior in “reinforcement learning” systems, like Google DeepMind’s AlphaZero, a game engine that plays board games such as Go. Instead of experts building strategies for board games, like a database of optimal opening and endgame moves, these systems teach themselves by playing millions of games against themselves. This allows them to develop strategies the creators couldn’t have imagined.

Semantic Search and Classification

Third, in addition to generating text, these models can also be used for search and classification. Instead of just searching for words that appear in the text, they can find text with similar meanings.

This “semantic search” technology is one of the reasons Google search is so good: you can find what you’re looking for even if the exact phrase doesn’t appear in the text. Until recently, this tech was only available inside large tech companies, but it’s finally becoming more accessible.
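One common way to do this kind of search is to turn each text into a list of numbers (an “embedding”) and rank texts by how closely their numbers point in the same direction. The sketch below assumes that approach. The three-number vectors are invented by hand for illustration; a real model would produce much longer ones, and the `search` helper is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: a real model would generate these from the text.
documents = {
    "How to fix a flat bicycle tire": [0.9, 0.1, 0.0],
    "Best pasta recipes for dinner": [0.0, 0.2, 0.9],
    "Repairing a punctured bike wheel": [0.8, 0.2, 0.1],
}

# Pretend embedding of the query "my bike has a puncture".
query_vector = [0.85, 0.15, 0.05]


def search(query_vec, docs, top_k=2):
    """Rank document titles by similarity to the query vector."""
    ranked = sorted(
        docs,
        key=lambda title: cosine_similarity(query_vec, docs[title]),
        reverse=True,
    )
    return ranked[:top_k]


top = search(query_vector, documents)
print(top)
```

Note that the query shares no exact phrase with either bicycle document, yet both rank above the pasta one because their vectors are close.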

Since the AI understands the meaning of text, it can also be used to group texts and discover hidden relationships between them.
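Grouping can be sketched in a similar spirit. The example below is a deliberately simple greedy approach, not a production clustering algorithm: each text joins the first group whose first member is similar enough, otherwise it starts a new group. The two-number vectors, the `0.9` threshold, and the `group_by_similarity` name are all assumptions for illustration.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def group_by_similarity(embeddings, threshold=0.9):
    """Greedily assign each text to the first group it is similar enough to."""
    groups = []  # each group is a list of texts
    for text, vector in embeddings.items():
        for group in groups:
            representative = embeddings[group[0]]
            if cosine_similarity(vector, representative) >= threshold:
                group.append(text)
                break
        else:
            # No existing group was close enough, so start a new one.
            groups.append([text])
    return groups


# Made-up embeddings for four short support messages.
embeddings = {
    "refund request": [0.9, 0.1],
    "password reset": [0.1, 0.9],
    "money back please": [0.8, 0.2],
    "cannot log in": [0.2, 0.8],
}

groups = group_by_similarity(embeddings)
print(groups)
```

With these toy vectors, the two billing messages end up together and the two login messages end up together, even though they share no words.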